Using Lobe Chat Translator

Lobe Chat is an open-source modern AI chat framework. It supports multiple AI providers (OpenAI/Claude 3/Gemini/Ollama/Qwen/DeepSeek), knowledge bases (file uploads/knowledge management/RAG), and multi-modality (visual/TTS/plugins/art). Deploy your private ChatGPT/Claude application for free with one click.

Install Lobe Chat

Lobe Chat offers several deployment and installation methods. For detailed instructions, refer to the official Lobe Chat documentation.

This guide uses Docker as an example and focuses on connecting Lobe Chat to d.run's model service.

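```bash
# LobeChat supports configuring the API Key and API Host directly at deployment time.
#   OPENAI_API_KEY   : your d.run API Key
#   OPENAI_PROXY_URL : the d.run API Host
#   ENABLED_OLLAMA=0 : disable the Ollama provider
#   ACCESS_CODE      : password users must enter to use this deployment
# Comments must not follow a trailing backslash, or the line continuation breaks.
docker run -d -p 3210:3210 \
  -e OPENAI_API_KEY=sk-xxxx \
  -e OPENAI_PROXY_URL=https://chat.d.run/v1 \
  -e ENABLED_OLLAMA=0 \
  -e ACCESS_CODE=drun \
  --name lobe-chat \
  lobehub/lobe-chat:latest
```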

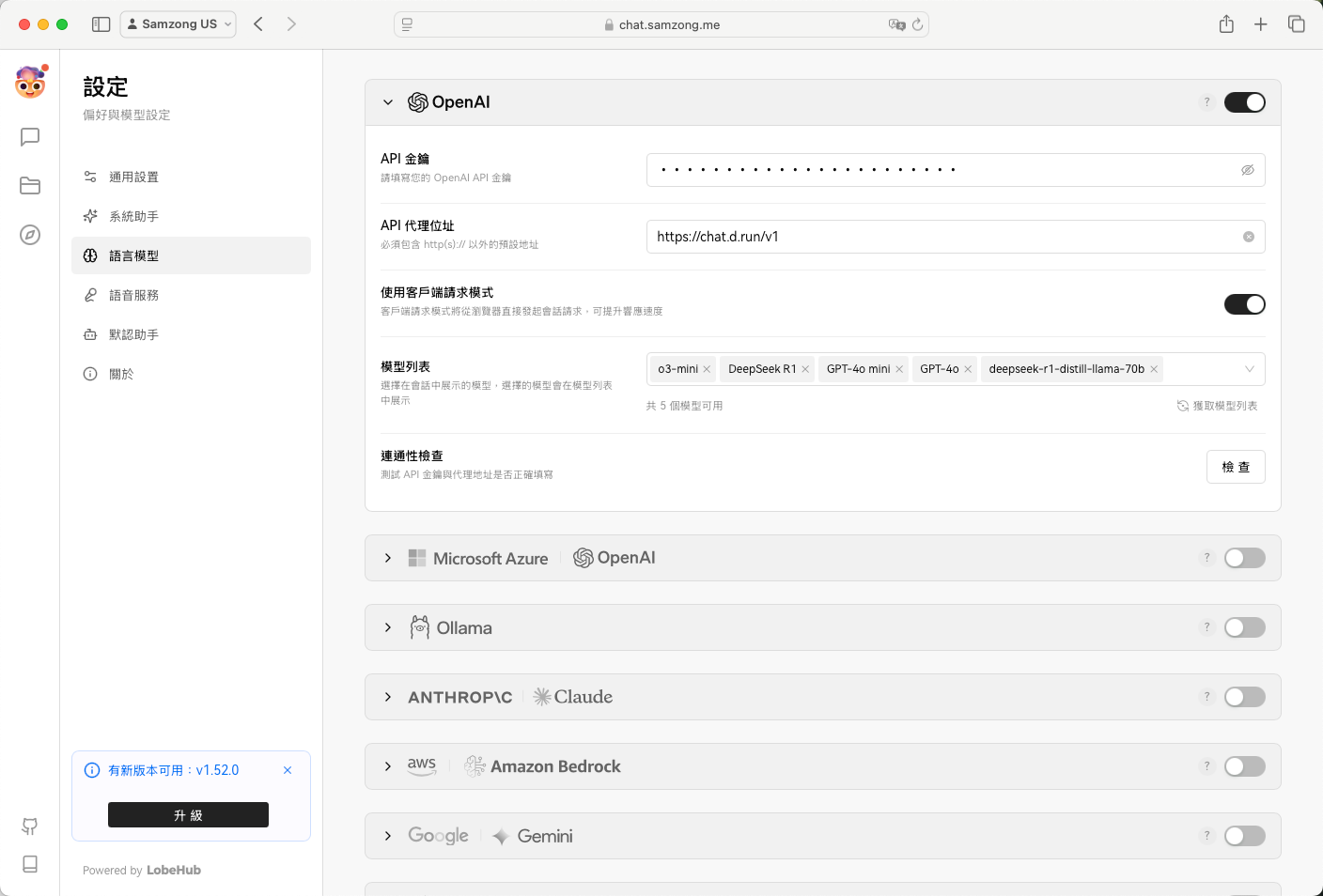
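To confirm the container came up before you configure anything, you can poll the published port until Lobe Chat responds. The following is a minimal sketch. It assumes `curl` is installed on the host and that the container was started with `-p 3210:3210` as shown. `wait_for_http` is a hypothetical helper and is not part of Lobe Chat or Docker.

```bash
#!/usr/bin/env bash
# Sketch: wait until an HTTP endpoint (e.g. Lobe Chat on port 3210) responds.
# Assumes curl is available; wait_for_http is a hypothetical helper name.

# wait_for_http URL TIMEOUT_SECONDS
# Returns 0 as soon as URL answers with a non-error HTTP status,
# or 1 once roughly TIMEOUT_SECONDS have passed without success.
wait_for_http() {
  local url=$1 timeout=$2 waited=0
  # -f: treat HTTP errors as failures; -sS: quiet except for errors.
  until curl -fsS -o /dev/null --max-time 2 "$url"; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}
```

For example, `wait_for_http http://localhost:3210 60 || docker logs lobe-chat` waits up to about a minute and prints the container logs if the UI never responds. Once it responds, open `http://localhost:3210` in a browser.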

Configure Lobe Chat

Lobe Chat also allows users to add model service provider configurations after deployment.

Enter the API Key and API Host obtained from d.run.

- API Key: Enter your API Key.
- API Host:
    - For MaaS, use `https://chat.d.run`.
    - For independently deployed models, check the model instance details. The host is typically `https://<region>.d.run`.
- Configure custom models: e.g., `public/deepseek-r1`

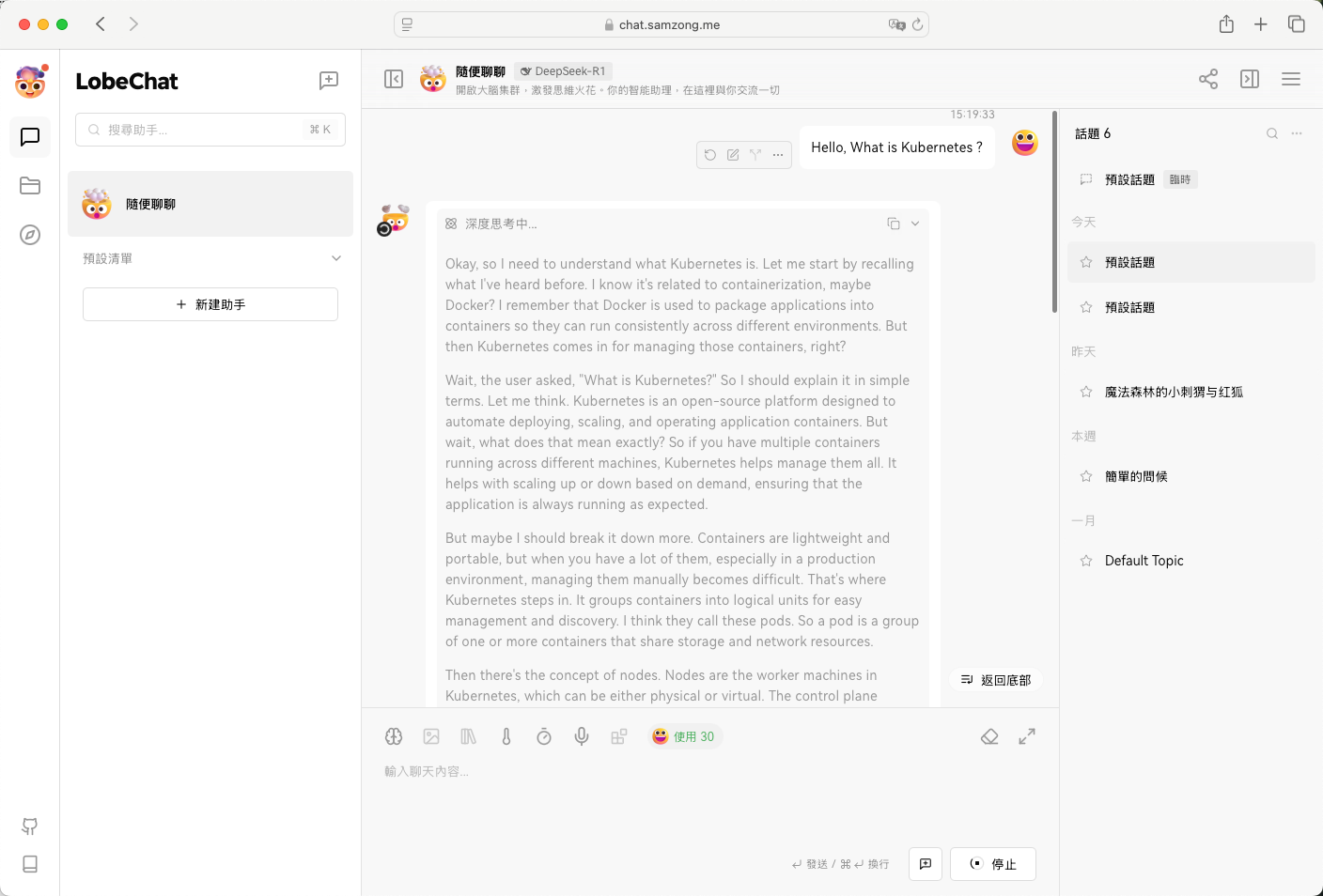
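Before entering these values in Lobe Chat, it can help to test the API Key, API Host, and model name with a direct request. This sketch assumes the API Host serves an OpenAI-compatible `POST /v1/chat/completions` endpoint. The Docker example points Lobe Chat's OpenAI provider at `https://chat.d.run/v1`, which suggests this, but it remains an assumption. `drun_api_host`, `drun_chat_body`, and the `DRUN_API_KEY` variable are hypothetical names used only for illustration.

```bash
#!/usr/bin/env bash
# Sketch: send one test request to d.run outside Lobe Chat.
# Assumption: the API Host serves an OpenAI-compatible
# POST <API Host>/v1/chat/completions endpoint.

# Print the API Host for a deployment (hypothetical helper).
#   "maas"   -> https://chat.d.run
#   <region> -> https://<region>.d.run (typical pattern; confirm it in the
#               model instance details for independently deployed models)
drun_api_host() {
  if [ "$1" = "maas" ]; then
    printf '%s\n' "https://chat.d.run"
  else
    printf 'https://%s.d.run\n' "$1"
  fi
}

# Build a minimal chat request body for MODEL with one user MESSAGE.
# No JSON escaping is done, so MESSAGE must not contain " or \.
drun_chat_body() {
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}\n' "$1" "$2"
}

# Only send the request when DRUN_API_KEY (hypothetical variable) is set.
if [ -n "${DRUN_API_KEY:-}" ]; then
  curl -sS "$(drun_api_host maas)/v1/chat/completions" \
    -H "Authorization: Bearer ${DRUN_API_KEY}" \
    -H "Content-Type: application/json" \
    -d "$(drun_chat_body public/deepseek-r1 'Hello')"
fi
```

If the request returns an authentication or "not found" error, re-check the API Key and API Host before configuring them in Lobe Chat.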

Lobe Chat Usage Demo